This repository contains the EQUATE dataset and the Q-Reas symbolic reasoning baseline [1].

EQUATE (Evaluating Quantitative Understanding Aptitude in Textual Entailment) is a new framework for evaluating quantitative reasoning ability in textual entailment.

EQUATE consists of five NLI test sets featuring quantities. You can download EQUATE here. Three of these test sets feature language from real-world sources such as news articles and social media (RTE, NewsNLI, Reddit), and two are controlled synthetic tests that evaluate a model's ability to reason with quantifiers and perform simple arithmetic (AWP, Stress Test).

| Test Set | Source | Size | Classes | Phenomena |
|---|---|---|---|---|
| RTE-Quant | RTE2-RTE4 | 166 | 2 | Arithmetic, Ranges, Quantifiers |
| NewsNLI | CNN | 968 | 2 | Ordinals, Quantifiers, Arithmetic, Approximation, Magnitude, Ratios, Verbal |
| RedditNLI | Reddit | 250 | 3 | Range, Arithmetic, Approximation, Verbal |
| StressTest | AQuA-RAT | 7500 | 3 | Quantifiers |
| AWPNLI | Arithmetic Word Problems | 722 | 2 | Arithmetic |

Models reporting performance on any NLI dataset can additionally evaluate on the EQUATE benchmark to demonstrate competence at quantitative reasoning.
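As a rough illustration of reporting results across the five test sets, the sketch below computes accuracy separately for each one. It assumes plain per-test-set accuracy as the metric; the function name `accuracy_by_test_set`, the tuple layout, and the toy examples are hypothetical and are not part of this repository's `eval.py` or `scorer.py`.

```python
from collections import defaultdict


def accuracy_by_test_set(examples):
    """Return {test_set: accuracy} for (test_set, gold, predicted) triples.

    Hypothetical helper for illustration only; not the repository's scorer.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for test_set, gold, predicted in examples:
        total[test_set] += 1
        correct[test_set] += int(gold == predicted)
    return {name: correct[name] / total[name] for name in total}


# Made-up predictions, used only to show the call shape.
toy_examples = [
    ("AWPNLI", "entailment", "entailment"),
    ("AWPNLI", "contradiction", "entailment"),
    ("RedditNLI", "neutral", "neutral"),
]

scores = accuracy_by_test_set(toy_examples)
for name, score in sorted(scores.items()):
    print(f"{name}: {score:.2f}")
```

Reporting each test set separately, rather than one pooled number, keeps the much larger StressTest set from dominating the result.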

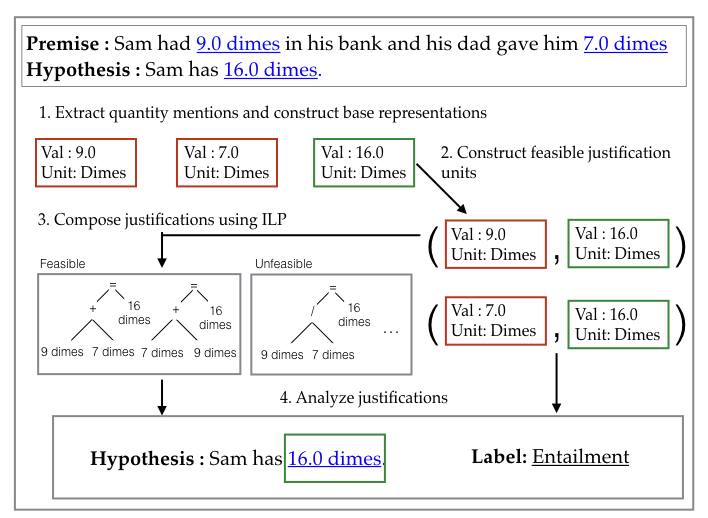

We also provide a baseline quantitative reasoner, Q-Reas. Q-Reas manipulates quantity representations symbolically to make entailment decisions. We hope this provides a framework for the development of hybrid neuro-symbolic architectures that combine the strengths of symbolic reasoners and neural models.

Q-Reas has five modules:

- Quantity Segmenter: Extracts quantity mentions

- Quantity Parser: Parses mentions into semantic representations called NUMSETS

- Quantity Pruner: Identifies compatible NUMSET pairs

- ILP Equation Generator: Composes compatible NUMSETS to form plausible equation trees

- Global Reasoner: Constructs justifications for each quantity in the hypothesis and analyzes them to determine entailment labels
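To make the flow between the five modules concrete, here is a deliberately simplified toy pipeline. It is not the Q-Reas implementation: `ToyNumset` and every function name are hypothetical, the segmenter is a single regular expression, the equation step only tries sums of two premise quantities instead of solving an ILP, and the reasoner returns just two labels.

```python
import re
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class ToyNumset:
    """Hypothetical stand-in for a NUMSET: a value and a one-word unit."""
    value: float
    unit: str


def segment(text):
    # Segmenter (toy): a number followed by a single lowercase word.
    return re.findall(r"(\d+(?:\.\d+)?)\s+([a-z]+)", text.lower())


def parse(mentions):
    # Parser (toy): turn (number, unit) strings into ToyNumset objects.
    return [ToyNumset(float(value), unit) for value, unit in mentions]


def prune(premise, hypothesis):
    # Pruner (toy): keep only premise-hypothesis pairs that share a unit.
    return [(p, h) for h in hypothesis for p in premise if p.unit == h.unit]


def compose(premise):
    # Equation generator (toy): sums of two same-unit premise quantities.
    return [ToyNumset(a.value + b.value, a.unit)
            for a, b in combinations(premise, 2) if a.unit == b.unit]


def reason(premise_text, hypothesis_text):
    # Reasoner (toy): every hypothesis quantity needs a matching premise
    # quantity (original or composed); a hypothesis with no quantities
    # passes vacuously.
    premise = parse(segment(premise_text))
    hypothesis = parse(segment(hypothesis_text))
    candidates = premise + compose(premise)
    for h in hypothesis:
        if not any(p.value == h.value for p, _ in prune(candidates, [h])):
            return "not_entailment"
    return "entailment"


print(reason("Sam has 3 apples and buys 4 apples", "Sam has 7 apples"))
print(reason("Sam has 3 apples and buys 4 apples", "Sam has 8 apples"))
```

The first call finds a justification for the hypothesis quantity (3 + 4 = 7, same unit) and the second does not, which mirrors the idea of justifying each hypothesis quantity before assigning a label.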

You can run Q-Reas on EQUATE with the following command:

python global_reasoner.py -DATASET_NAME (rte, newsnli, reddit, awp, stresstest)

Q-Reas consists of the following components:

- Quantity Segmenter: quantity_segmenter.py (uses utils_segmenter.py)

- Quantity Parser: numerical_parser.py (uses utils_parser.py)

- Quantity Pruner: numset_pruner.py

- ILP Equation Generator: ilp.py

- Global Reasoner: global_reasoner.py (uses utils_reasoner.py, scorer.py, eval.py)

and utilizes the following data structures:

- numset.py: Defines semantic representation for a quantity

- parsed_numsets.py: Stores extracted NUMSETS for a premise-hypothesis pair

- compatible_numsets.py: Stores compatible pairs of NUMSETS

Please cite [1] if our work influences your research.

EQUATE: A Benchmark Evaluation Framework for Quantitative Reasoning in Natural Language Inference (CoNLL 2019)

[1] A. Ravichander*, A. Naik*, C. Rose, E. Hovy. EQUATE: A Benchmark Evaluation Framework for Quantitative Reasoning in Natural Language Inference.

@article{ravichander2019equate,
  title={EQUATE: A Benchmark Evaluation Framework for Quantitative Reasoning in Natural Language Inference},
  author={Ravichander, Abhilasha and Naik, Aakanksha and Rose, Carolyn and Hovy, Eduard},
  journal={arXiv preprint arXiv:1901.03735},
  year={2019}
}
